PROBLEM STATEMENT

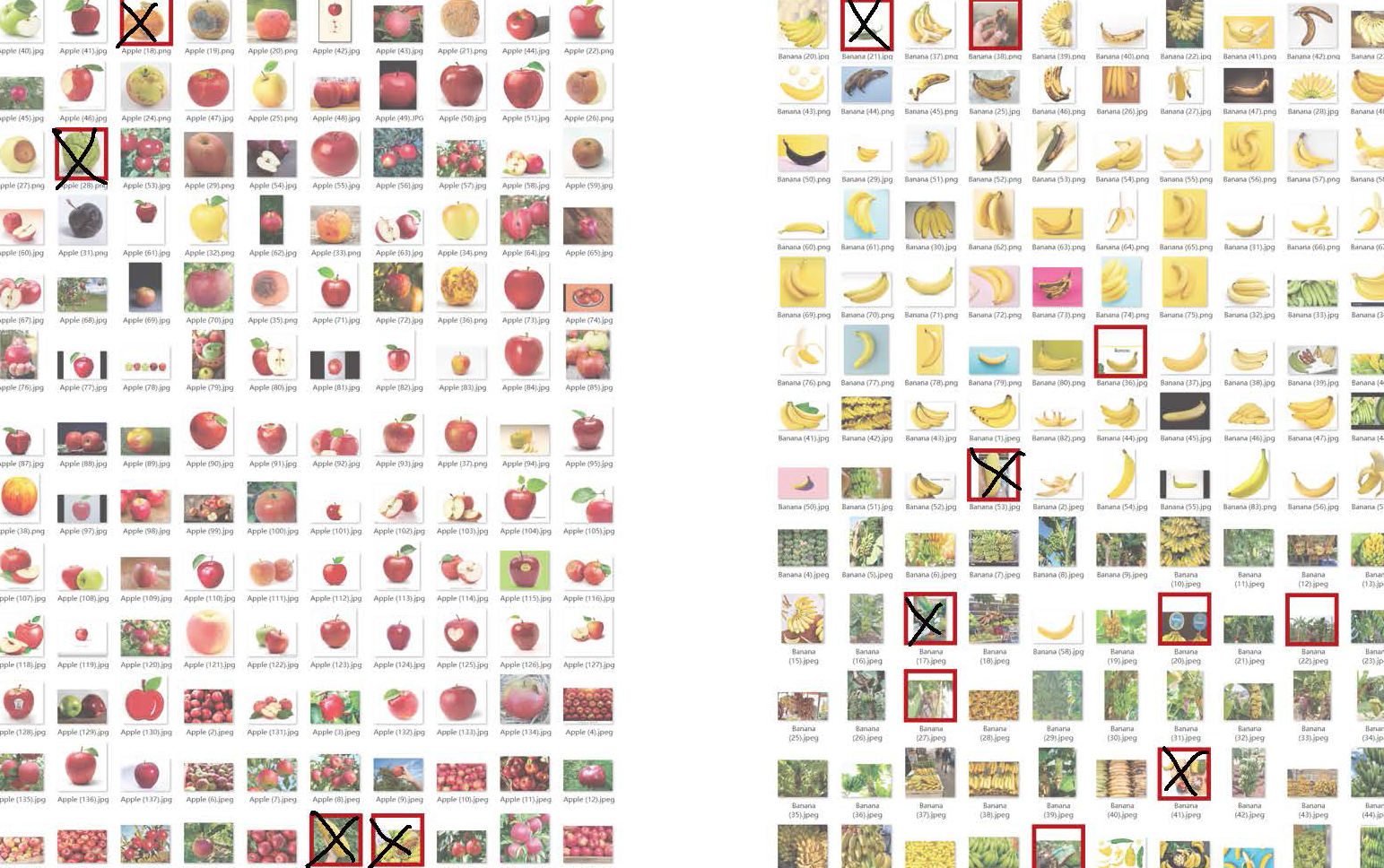

Outlier Problems:

Large datasets with unexpected outliers: outliers are hard to find but may have a large effect on prediction results.

Webcam intruders during collection: because the webcam captures frames consecutively, removing unwanted frames is difficult.

Human-introduced diversity: humans cannot precisely control how many outliers they insert, which may reduce prediction accuracy.

Current Teachable Machine Design Issues:

Teachable Machine treats all input as training data and has no way to recognize outliers in the dataset.

Prediction details are buried too deep for users without an ML background to understand them easily.

Design Implications:

Users can recognize outliers if we introduce a human-in-the-loop methodology.

Simple prediction details with proper explanations can help users discover a potential outlier's influence.

RESEARCH GOALS

How might we give users feedback that shows how outliers affect the accuracy of the trained model, so that they provide higher-quality training samples?

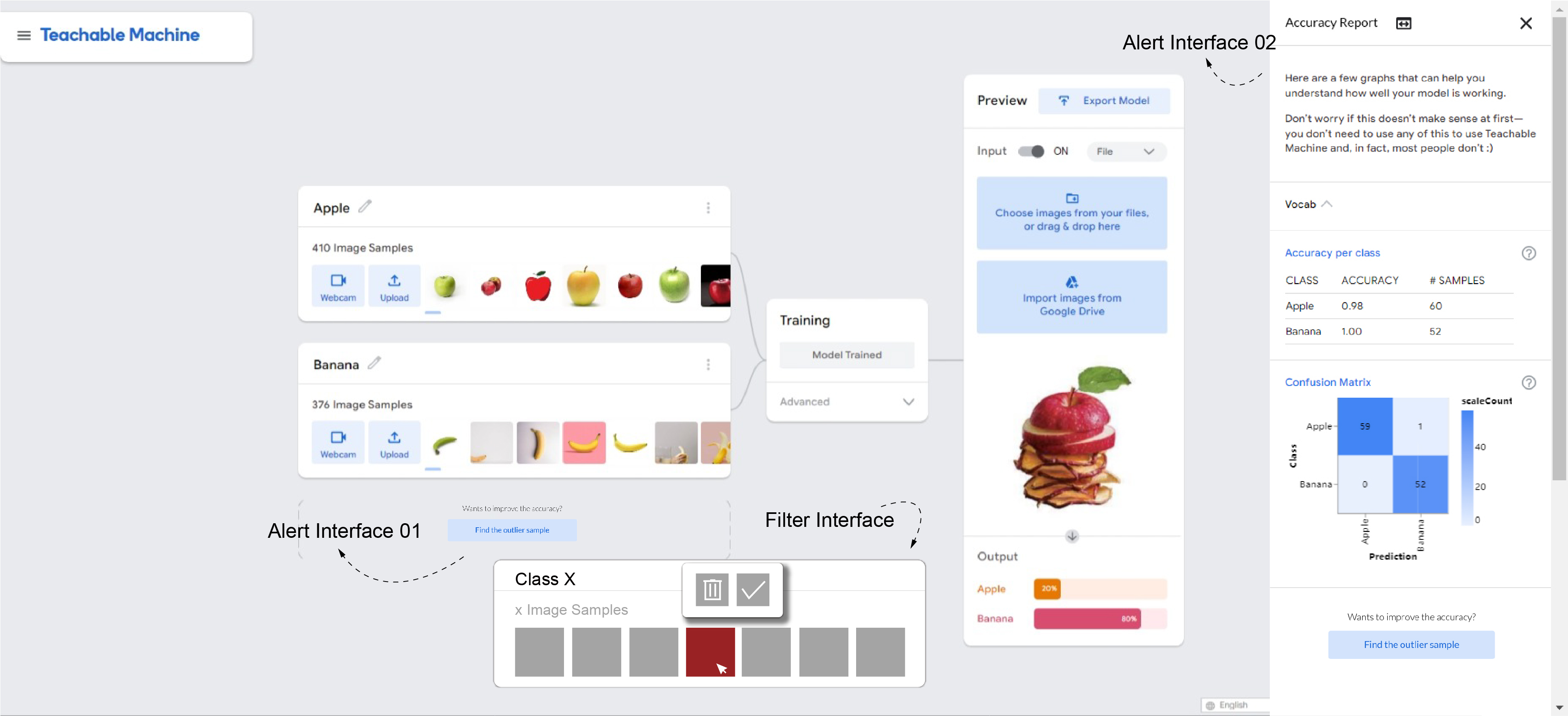

(RQ1): How could the interface alert people that they might have accidentally introduced outliers into the training dataset?


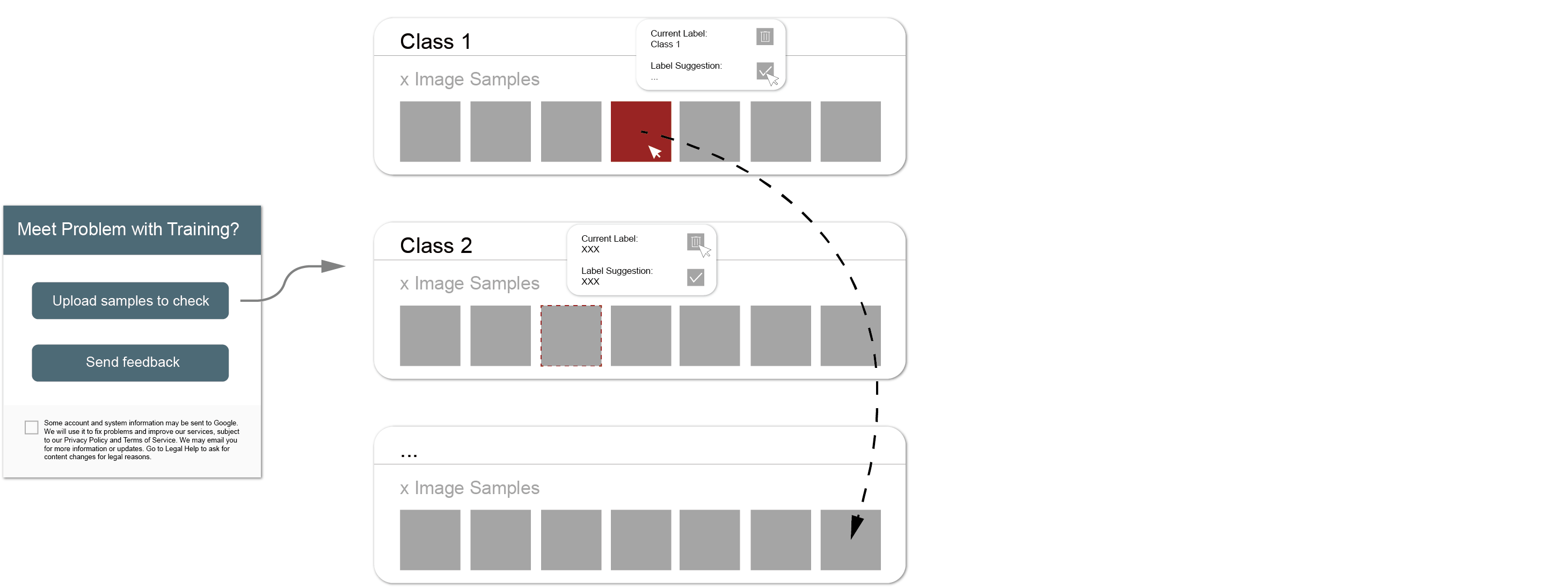

(RQ2): How could the interface guide users to effectively filter the outlier suggestions from the Teachable Machine algorithm?
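This study does not describe how the algorithm's outlier suggestions are generated, and Teachable Machine's internals are not covered here. Purely as an illustration of what a suggestion list could be built from, here is a minimal sketch of a swapped-in heuristic (not Teachable Machine's method): it assumes each training image has already been converted to a numeric feature vector (for example, an embedding), and flags samples whose distance to their own class centroid is unusually large. The function name `flag_outliers` and the threshold `k` are hypothetical.

```python
import math
from statistics import median


def flag_outliers(samples, labels, k=3.0):
    """Hypothetical helper: return indices of samples far from their class centroid.

    samples: equal-length numeric feature vectors (assumed image embeddings)
    labels:  the class label of each sample
    k:       how many median absolute deviations (MAD) above the median
             distance a sample must be before it is flagged (assumed default)
    """
    flagged = []
    for cls in set(labels):
        idx = [i for i, y in enumerate(labels) if y == cls]
        dim = len(samples[idx[0]])
        # Mean feature vector of this class.
        centroid = [sum(samples[i][d] for i in idx) / len(idx) for d in range(dim)]
        # Euclidean distance from each sample to its class centroid.
        dists = {i: math.dist(samples[i], centroid) for i in idx}
        med = median(dists.values())
        mad = median(abs(v - med) for v in dists.values())
        for i, v in dists.items():
            # Skip classes with zero spread, where the ratio is undefined.
            if mad > 0 and (v - med) / mad > k:
                flagged.append(i)
    return sorted(flagged)


# Example: class "a" has one stray sample (index 4), class "b" is tight.
X = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1), (5, 5),
     (10, 10), (12, 10), (10, 12), (11, 11)]
y = ["a"] * 5 + ["b"] * 4
print(flag_outliers(X, y))
```

The median and MAD are used instead of the mean and standard deviation because a single extreme sample inflates the standard deviation and can hide itself; the median-based threshold is less affected by the very outliers it is trying to find. The flagged indices would only be suggestions, which fits the human-in-the-loop framing of RQ2: the user still decides what to remove.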

PROTOTYPE DESIGN

Initial Solution 01: Human supervised classification

Initial Solution 01

Initial Solution 02: Passive outlier identification and correction

Initial Solution 02

Final solution: "Alert" Interface and "Outlier filter" Interface.

Final Solution

PROTOTYPING SESSION

I tested the prototype with five participants: one from a computer science background, two from a design background (architecture), and two from interdisciplinary backgrounds (HCI & cognitive science/management). Most of them had basic knowledge of machine learning or statistics.

Round 1: Tutorial (Control Group)

Let participants run Teachable Machine (TM) and observe how well the current dataset classifies the designated samples.

Have participants choose their preferred alert interface from two alternatives; the chosen design is used as the test prototype, together with the outlier filter interface, in the following rounds.

Round 2: Train new model with human-filtered training set

Let participants optimize the training set manually by removing the suggested outliers in the checklist.

Run TM again on the new dataset and observe how the accuracy changes, keeping the outlier pattern in mind.

Round 3: Apply new model with new training set

Let participants manually filter a new dataset based on the outlier pattern learned in Round 2, then run TM again and observe how the accuracy changes.

After-session interview and questionnaire

Lo-fi Paper Prototype

Checklist Template

QUANTITATIVE ANALYSIS

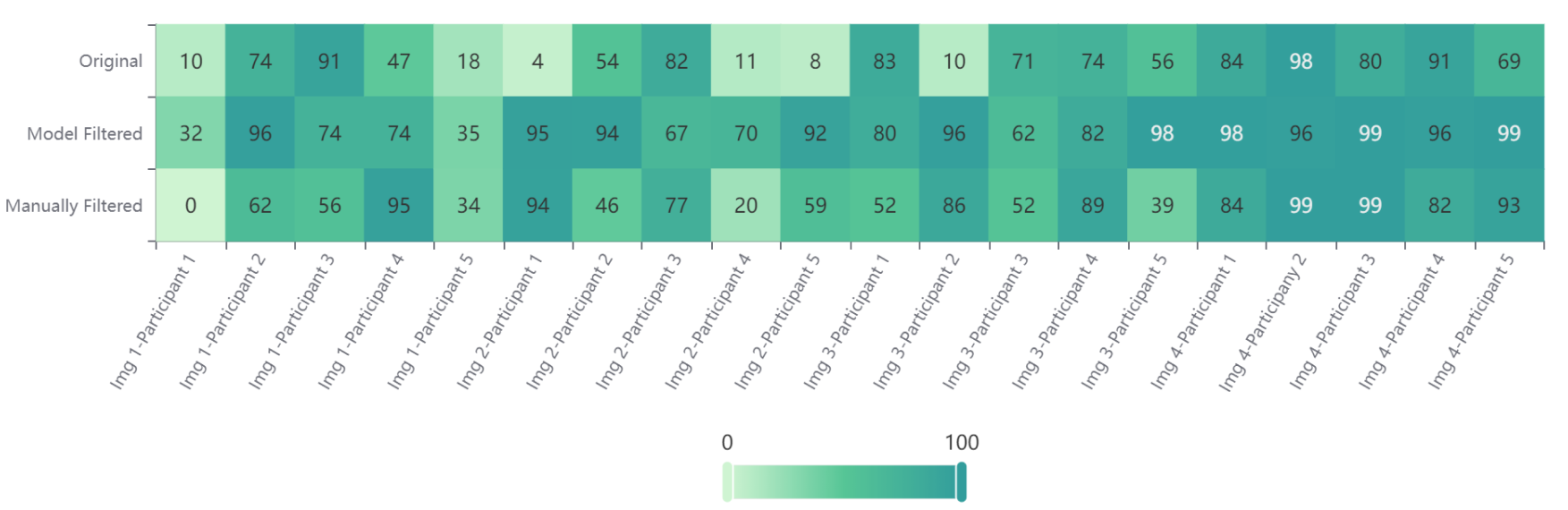

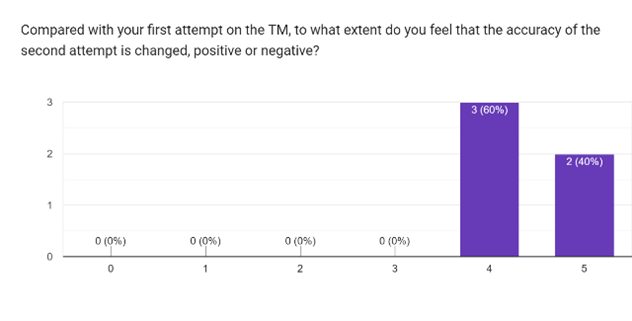

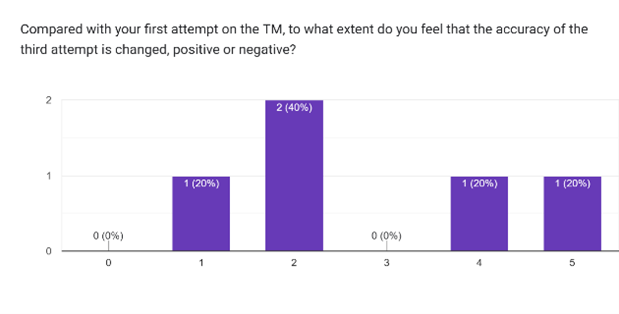

The chart below visualizes the prediction accuracy on the sample images across the three rounds for all five participants. The suggested round (model-filtered) has the highest accuracy. However, compared with the initial round, accuracy in the manually filtered round also improved noticeably, possibly because participants learned the outlier pattern from the outlier suggestion function.

Accuracy Result

QUALITATIVE ANALYSIS

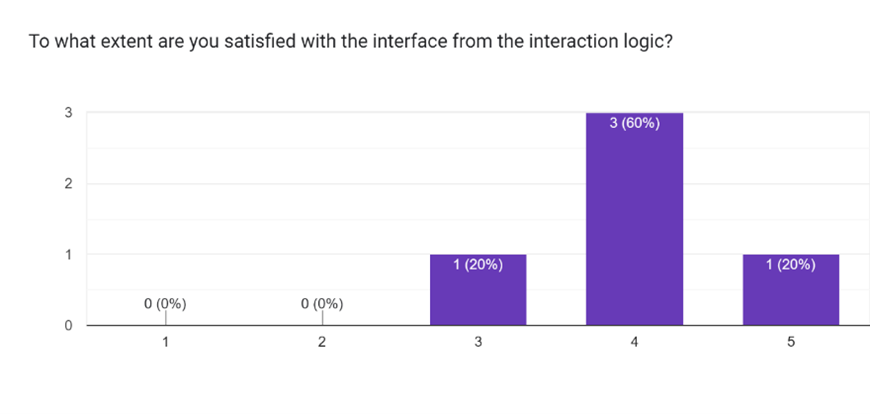

The survey and interview after the prototyping session provided information beyond prediction accuracy: participants described their findings, feelings, and suggestions about the process, which made it possible to synthesize design implications.

Questionnaire Result

Interface Design

For the alert interface, participants preferred the second design because:

- They can see the accuracy and understand the purpose of deleting outliers.
- It encourages them to think about further optimization for better performance.

For the outlier filter interface, participants attributed their better performance to its simple and clear design.

Other Findings

Participants expressed more curiosity about how Teachable Machine works than expected. Although they were willing to learn more about it, they also kept an interesting distance from the algorithm. The Round 3 results disappointed participants with professional backgrounds, who had been confident in their judgment in Round 2.

INSIGHTS AND FUTURE WORK

Insights

The “alert” interface stimulates users' desire to learn what affects their prediction performance.

The “outlier filter” interface provides a possible paradigm for users to learn how to distinguish outliers and what effect they have on the model.

More Research Questions

Participants' feedback, especially their confusion, also raised some interesting questions for further speculation, including:

- Why does Google place “under the hood” among the advanced functions?
- How can we balance human bias (users' choices) and ML model bias (algorithm bias)?
- How well could the filter system perform on unpredictable new datasets (generalization)?